Introduction

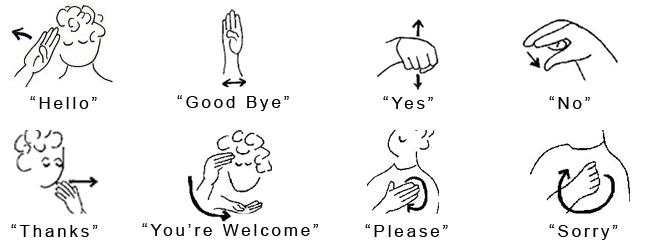

As part of the Udacity Artificial Intelligence Nanodegree (AIND), the task was to build a system that recognizes words communicated in American Sign Language (ASL). A preprocessed dataset of tracked hand and nose positions extracted from video was provided. The goal is to train a set of Hidden Markov Models (HMMs) on part of this dataset and use them to identify individual words in test sequences.
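The recognition step can be sketched as follows. It assumes one already-trained HMM per word and labels a test sequence with the word whose model gives it the highest log-likelihood, computed by the forward algorithm. This is a minimal illustration, not the notebook's code. The 1-D feature, the class `GaussianHMM1D`, the helper `recognize`, and all parameter values are hypothetical and hand-set here. A real model would have its parameters learned (for example with Baum-Welch) from multi-dimensional hand and nose features.

```python
import math
from typing import Dict, List, Sequence


def safe_log(p: float) -> float:
    """log(p), mapping zero probability to -inf."""
    return math.log(p) if p > 0 else -math.inf


def log_gaussian(x: float, mean: float, var: float) -> float:
    """Log density of a 1-D Gaussian N(mean, var) evaluated at x."""
    return -0.5 * (math.log(2 * math.pi * var) + (x - mean) ** 2 / var)


def logsumexp(values: Sequence[float]) -> float:
    """Numerically stable log(sum(exp(v) for v in values))."""
    m = max(values)
    if m == -math.inf:
        return -math.inf
    return m + math.log(sum(math.exp(v - m) for v in values))


class GaussianHMM1D:
    """Hypothetical tiny HMM: one 1-D Gaussian emission per hidden state."""

    def __init__(self, start: List[float], trans: List[List[float]],
                 means: List[float], variances: List[float]):
        self.start = start          # start[i]    = P(first state is i)
        self.trans = trans          # trans[i][j] = P(next state is j | state i)
        self.means = means          # emission mean of each state
        self.variances = variances  # emission variance of each state

    def score(self, obs: Sequence[float]) -> float:
        """Return log P(obs | model) via the forward algorithm in log space."""
        n = len(self.start)
        # alpha[i] = log P(obs[0..t], state_t = i)
        alpha = [safe_log(self.start[i])
                 + log_gaussian(obs[0], self.means[i], self.variances[i])
                 for i in range(n)]
        for x in obs[1:]:
            alpha = [
                logsumexp([alpha[i] + safe_log(self.trans[i][j]) for i in range(n)])
                + log_gaussian(x, self.means[j], self.variances[j])
                for j in range(n)
            ]
        return logsumexp(alpha)


def recognize(models: Dict[str, GaussianHMM1D], obs: Sequence[float]) -> str:
    """Pick the word whose model assigns the sequence the highest log-likelihood."""
    return max(models, key=lambda word: models[word].score(obs))


# Hypothetical left-to-right word models with hand-picked parameters.
left_to_right = [[0.7, 0.3], [0.0, 1.0]]
models = {
    "JOHN": GaussianHMM1D([1.0, 0.0], left_to_right, [0.0, 5.0], [1.0, 1.0]),
    "BOOK": GaussianHMM1D([1.0, 0.0], left_to_right, [10.0, 20.0], [1.0, 1.0]),
}
test_seq = [0.1, -0.2, 4.8, 5.1]
print(recognize(models, test_seq))  # JOHN
```

Working in log space keeps long sequences from underflowing. Scoring every word model and taking the argmax is the simplest decision rule when each test item is recognized on its own.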

The system also incorporates Statistical Language Models (SLMs), which capture the conditional probability of particular word sequences occurring. Using them alongside the HMMs improves the system's recognition accuracy.
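The sketch below shows one common way an SLM can be combined with per-word HMM scores. A Viterbi search over a bigram model picks the sentence that maximizes the HMM log-likelihoods plus a weighted language-model log-probability. This is an illustrative assumption, not necessarily the approach taken in the notebook. The bigram table, the `<s>` start token, the `lm_weight` scale factor, the unseen-pair `floor`, and the function names are all hypothetical. The HMM log-likelihoods are made-up numbers standing in for the output of the word models.

```python
import math
from typing import Dict, List, Tuple

Bigram = Dict[Tuple[str, str], float]


def bigram_logprob(bigrams: Bigram, prev: str, word: str, floor: float = 1e-6) -> float:
    """log P(word | prev); unseen pairs get a small floor (a crude stand-in for smoothing)."""
    return math.log(bigrams.get((prev, word), floor))


def decode_sentence(hmm_scores: List[Dict[str, float]], bigrams: Bigram,
                    lm_weight: float = 1.0) -> List[str]:
    """Viterbi search maximizing sum_t [HMM log-lik of w_t + lm_weight * log P(w_t | w_{t-1})]."""
    # best[w] = (score of the best partial sentence ending in w, that sentence)
    best: Dict[str, Tuple[float, List[str]]] = {"<s>": (0.0, [])}
    for scores_t in hmm_scores:
        new_best = {}
        for word, hmm_ll in scores_t.items():
            # Extend every surviving partial sentence with `word`; keep the best one.
            candidates = [
                (prev_score + lm_weight * bigram_logprob(bigrams, prev, word) + hmm_ll,
                 path + [word])
                for prev, (prev_score, path) in best.items()
            ]
            new_best[word] = max(candidates, key=lambda c: c[0])
        best = new_best
    return max(best.values(), key=lambda c: c[0])[1]


# Made-up HMM log-likelihoods for a two-sign test sentence.
hmm_scores = [
    {"JOHN": -10.0, "MARY": -12.0},
    {"WRITE": -20.0, "BOOK": -19.5},
]
# Hypothetical bigram probabilities P(word | prev).
bigrams = {
    ("<s>", "JOHN"): 0.6, ("<s>", "MARY"): 0.4,
    ("JOHN", "WRITE"): 0.5, ("JOHN", "BOOK"): 0.05,
    ("MARY", "WRITE"): 0.3, ("MARY", "BOOK"): 0.3,
}
print(decode_sentence(hmm_scores, bigrams, lm_weight=0.0))  # ['JOHN', 'BOOK']
print(decode_sentence(hmm_scores, bigrams, lm_weight=1.0))  # ['JOHN', 'WRITE']
```

With `lm_weight=0.0` the result is just the HMM argmax at each position. With the language model switched on, the slightly weaker HMM score for `WRITE` is outweighed by the much likelier bigram `JOHN WRITE`. This is the kind of correction that lets an SLM raise accuracy.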

Implementation

https://github.com/sunilp/sign-language-recognizer/blob/master/asl_recognizer.ipynb